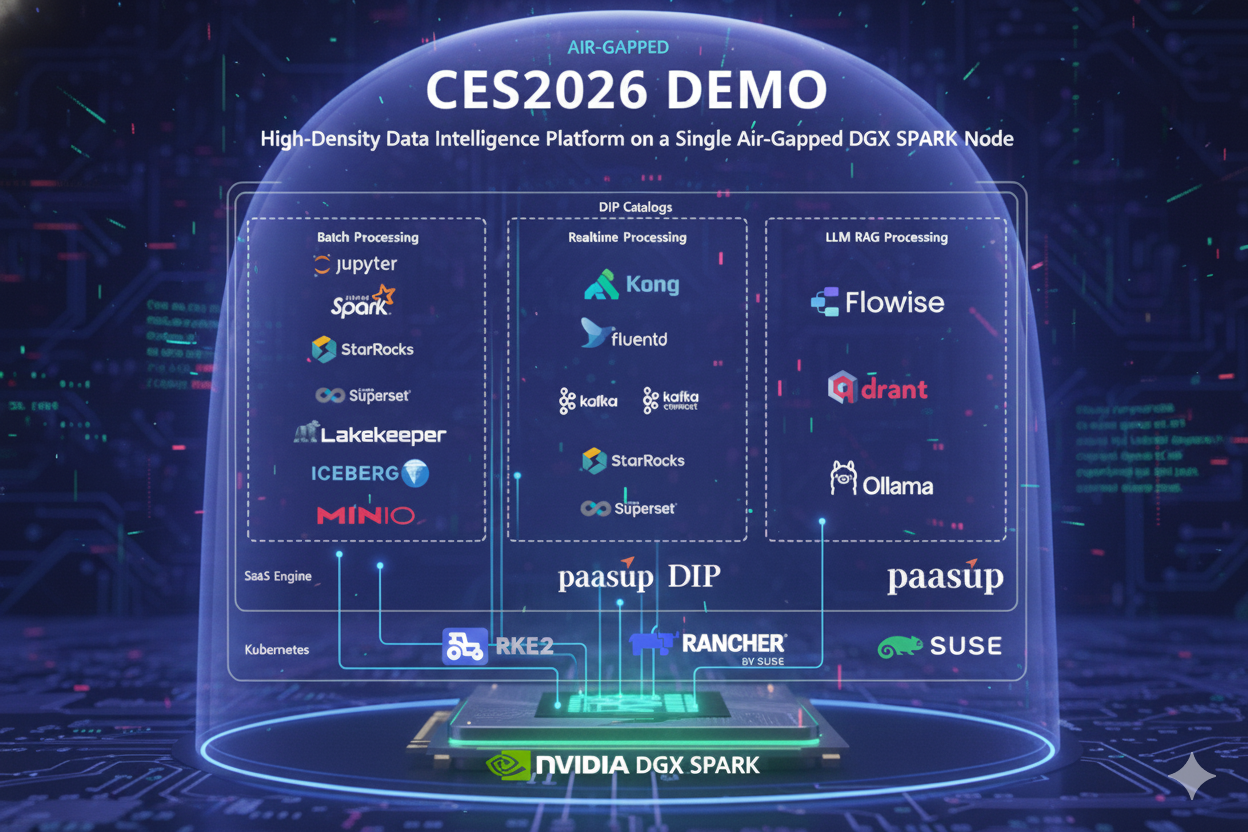

CES 2026 Demo - High-Density Data Intelligence Platform on a Single Air-Gapped DGX SPARK Node

PAASUP DIP demonstrates a high-density platform on a single air-gapped DGX SPARK node, integrating batch, real-time, and LLM RAG via SaaS-style management and RKE2/Rancher orchestration.

Modern data platforms are typically designed on the assumption of unlimited cloud resources and constant internet connectivity. However, in environments where air-gapped security isolation is non-negotiable, such as defense, national intelligence, or core manufacturing, traditional cloud-native architectures fall short.

1. The Challenge: Why a Single DGX SPARK Node?

This CES 2026 demo is a technical proof-of-concept (PoC) designed for the following extreme constraints:

- Zero Connectivity: 100% physical disconnection from external networks.

- Resource Consolidation: All workloads are integrated into a single high-performance NVIDIA DGX SPARK server.

- Workload Diversity: Batch, real-time, and LLM inference engines must coexist without resource interference.

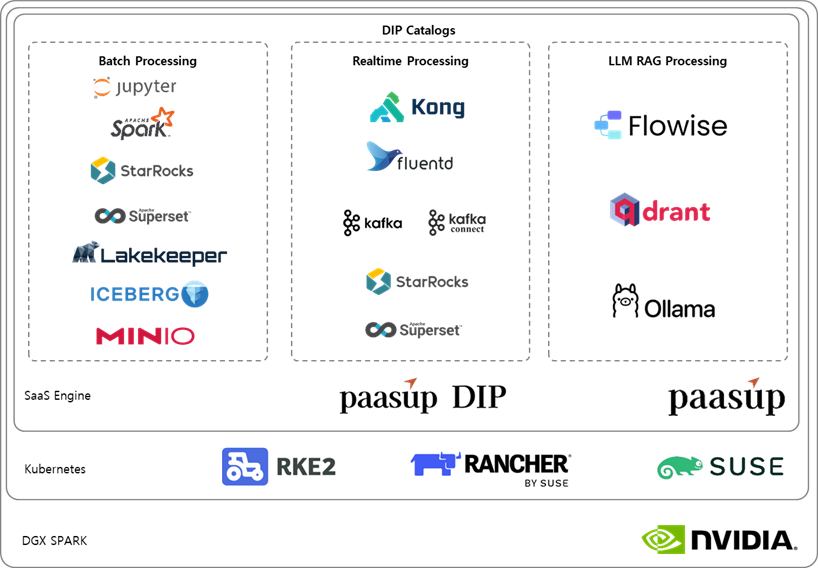

2. PAASUP DIP: SaaS-based Integrated Operation and Orchestration

The core value of PAASUP DIP lies in its ability to integrate and manage a complex open-source catalog through a SaaS-style interface. Users can provision necessary data services instantly without having to manage the underlying infrastructure complexity.

- SaaS Engine-based Management: Even in an air-gapped environment, the PAASUP SaaS engine provides the operational convenience to centrally control and deploy batch, real-time analytics, and AI services.

- Kubernetes Orchestration (RKE2 & Rancher):

  - RKE2: A security-hardened K8s distribution optimized for air-gapped installations.

  - SUSE Rancher: Provides unified monitoring and lifecycle management for all containerized data engines.

- NVIDIA DGX SPARK Infrastructure: The computing resources of a single DGX SPARK node keep performance stable even when multiple data engines run simultaneously.
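One way to reason about running several engines on one node is a simple capacity budget: Kubernetes schedules pods against the resource requests they declare, so the summed requests have to fit within what the node can offer. The sketch below illustrates that check only. The `Request` type, the engine list, and every number in it are hypothetical and are not taken from the demo configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    """Resources one engine asks the scheduler to reserve (hypothetical values)."""
    name: str
    cpu_cores: float
    memory_gib: float


def fits_on_node(requests, node_cpu_cores, node_memory_gib):
    """Return True when the summed requests stay within the node's capacity."""
    total_cpu = sum(r.cpu_cores for r in requests)
    total_mem = sum(r.memory_gib for r in requests)
    return total_cpu <= node_cpu_cores and total_mem <= node_memory_gib


# Hypothetical budget; real figures depend on the node and the workloads.
budget = [
    Request("kafka", cpu_cores=2, memory_gib=8),
    Request("starrocks", cpu_cores=4, memory_gib=24),
    Request("spark", cpu_cores=4, memory_gib=24),
    Request("ollama", cpu_cores=4, memory_gib=40),
]
```

Calling `fits_on_node(budget, node_cpu_cores, node_memory_gib)` with the node's actual allocatable capacity shows whether a planned mix of engines can be scheduled together before anything is deployed.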

3. Key Demo Scenario Analysis

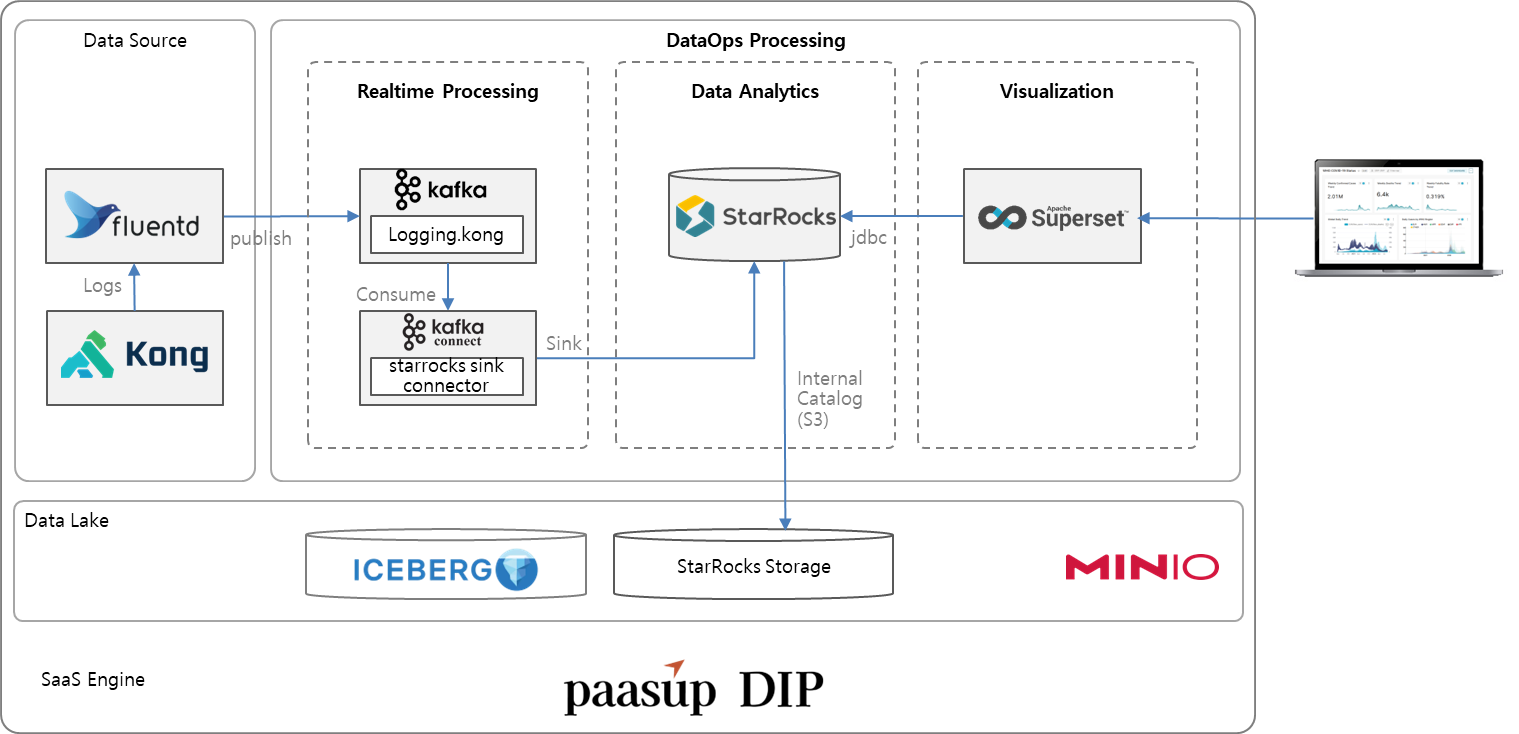

A. Single-Node Real-time Streaming

To collect and process system log data within the isolated environment, Kong and Fluentd are deployed as frontend collectors.

- Ingestion & Queuing: Data is queued through Kafka.

- Storage & Analytics: StarRocks syncs data via Kafka connectors, providing OLAP results in milliseconds.
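The collect, queue, and analyze flow described above can be sketched with the Python standard library alone. This is an illustration of the data flow, not the demo's implementation: an in-memory `Queue` stands in for the Kafka topic, a `Counter` stands in for an OLAP aggregation in StarRocks, and the JSON log format with a `level` field is an assumption.

```python
import json
from collections import Counter
from queue import Queue


def ingest(raw_lines, topic):
    """Collector stage: parse JSON log lines and enqueue them, skipping bad input."""
    for line in raw_lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # malformed line: drop it rather than block the stream
        if isinstance(event, dict):
            topic.put(event)


def drain_and_aggregate(topic):
    """Sink stage: drain the queue and count events per log level."""
    counts = Counter()
    while not topic.empty():
        event = topic.get()
        counts[event.get("level", "unknown")] += 1
    return counts


def run_pipeline(raw_lines):
    """Wire the two stages together through a single in-memory queue."""
    topic = Queue()  # stand-in for a Kafka topic
    ingest(raw_lines, topic)
    return drain_and_aggregate(topic)
```

The queue between the two stages is the point of the design: collectors and the analytics store never call each other directly, so either side can be restarted or scaled without the other noticing.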

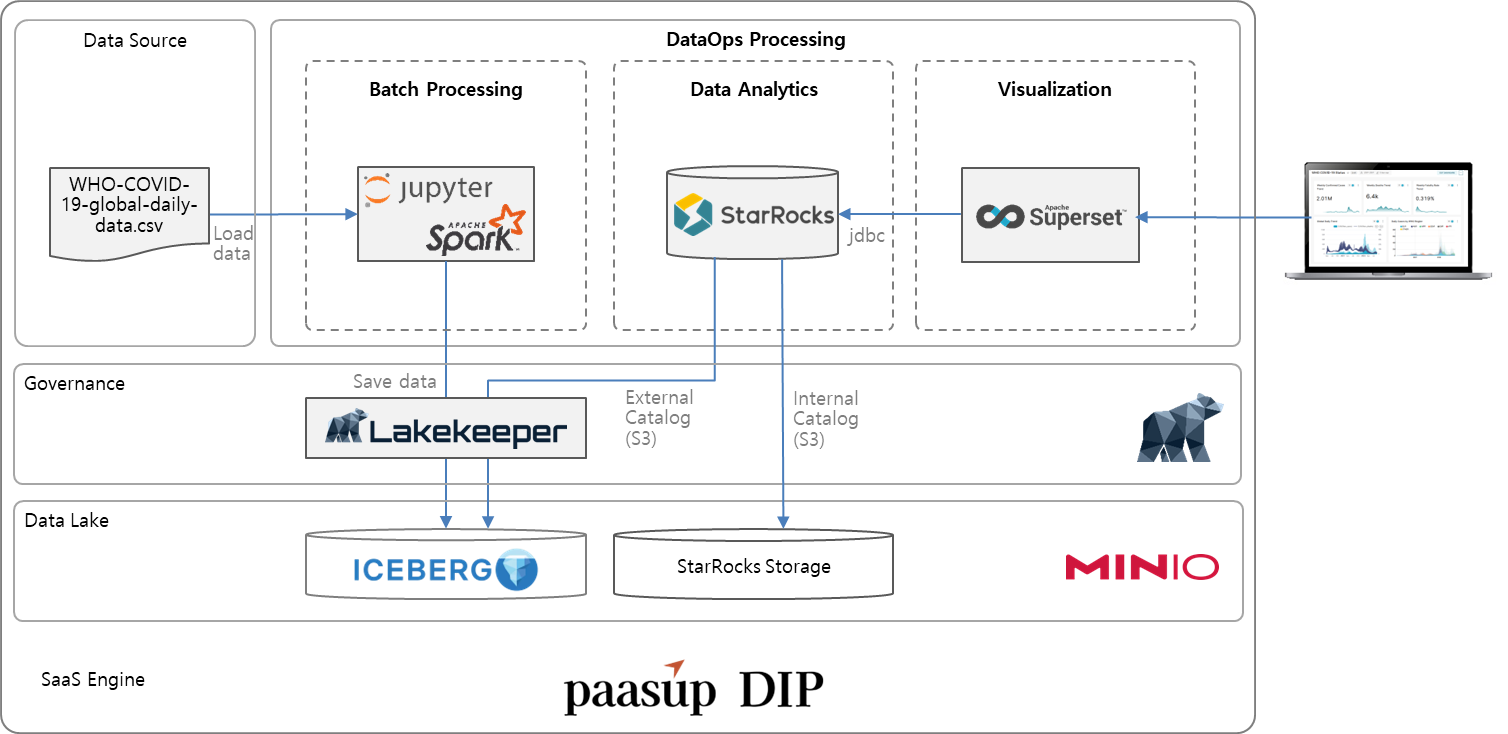

B. Secure Batch Analytics & Governance

For secure large-scale data refinement, the platform provides a Spark and Jupyter environment.

- Table Format: Uses Apache Iceberg for performance and flexibility.

- Governance: Lakekeeper manages data permissions and catalogs, demonstrating enterprise-grade governance in isolation.
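Lakekeeper exposes an Iceberg REST catalog, so a Spark session in the Jupyter environment would typically reach it through Iceberg's standard catalog properties. The sketch below only builds those properties as a dictionary. The catalog name, endpoint URL, and warehouse name are hypothetical placeholders, and authentication settings are omitted.

```python
def iceberg_rest_catalog_conf(catalog, uri, warehouse):
    """Build Spark properties that register an Iceberg REST catalog under `catalog`."""
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        # Enables Iceberg's SQL extensions in Spark.
        "spark.sql.extensions":
            "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        # Registers the catalog and points it at a REST endpoint.
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.type": "rest",
        f"{prefix}.uri": uri,
        f"{prefix}.warehouse": warehouse,
    }


conf = iceberg_rest_catalog_conf(
    catalog="lake",                                     # hypothetical catalog name
    uri="http://lakekeeper.example.internal/catalog",   # hypothetical in-cluster endpoint
    warehouse="demo",                                   # hypothetical warehouse name
)
```

Each key and value can be passed to `SparkSession.builder.config(key, value)`. In an air-gapped cluster the Iceberg Spark runtime JAR also has to come from a local artifact mirror rather than a public repository.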

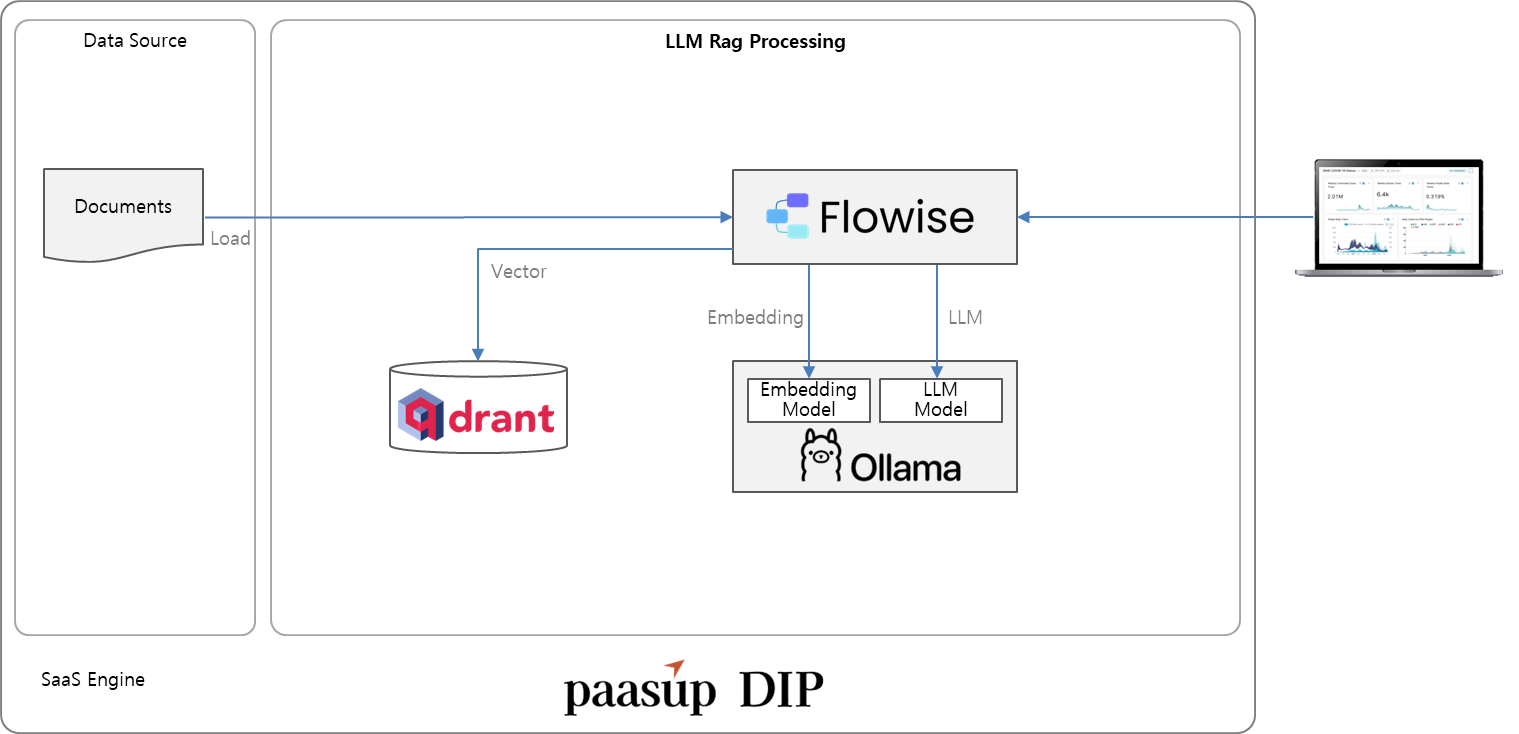

C. Air-Gapped Intelligence Services (LLM RAG)

The intelligent service runs Ollama to execute local LLMs and embedding models without internet access.

- Local LLM & Embedding: Ollama performs on-device inference and embedding.

- Contextual Search: Flowise and Qdrant (Vector DB) combine to create a secure RAG pipeline.
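The retrieval step of a RAG pipeline can be sketched in a few lines: embed the question, rank stored documents by similarity, and place the best matches in the prompt. The code below is a toy illustration under stated assumptions. `toy_embed` is a bag-of-words stand-in for a real embedding model served by Ollama, the in-memory document list stands in for a Qdrant collection, and the prompt wording is invented for the example.

```python
import math
from collections import Counter


def toy_embed(text):
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = toy_embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, toy_embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Assemble the retrieved context and the question into one prompt string."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

Because both the embedding and the generation steps run locally, the question, the retrieved context, and the answer never leave the node, which is what makes the pattern usable in an isolated network.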

4. Beyond the Demo: A Comprehensive 20+ Tool Catalog

While the CES 2026 demo uses a curated 'subset catalog,' PAASUP DIP is backed by over 20 proven technologies.

- AI Serving: Supports vLLM, KServe, and NVIDIA NIM for high-performance deployment.

- LLM Lifecycle: NVIDIA NeMo support allows for model fine-tuning.

- Stream Processing: Apache Flink is included for complex event processing.

5. Conclusion: Optimized Data Engines for Industrial Use

PAASUP DIP is a model for integrating the latest data technologies into the most restrictive environments. Whether your environment is cloud, on-premise, or 100% air-gapped, PAASUP delivers an optimized engine tailored to your needs.